Customer personas are a great way to market your products to the right audience. Visitors to your website who match your customer persona are the right fit for your brand. With data collected about a specific visitor, you can personalize your message and send it at the right time to maximize the chance of conversion. However, you cannot rely wholly on customer personas to personalize your conversations. No matter how much data you collect, you don’t know your customers on a personal level.

A/B email testing to the rescue

To get closer to sending the right personalized experience to your customers, you need to A/B test your email communications. This involves making an educated guess, sending two variations of your email to two groups of the same sample size from your mailing list, and selecting the winner based on predetermined goals. The variation can be in any of the following elements:

- Subject line

- Headline

- Body copy

- Call to action

- The email layout

- Personalization Level

- Images

- Offers

- Call to action copy

- Sending Times
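For readers who want to see what such a split looks like outside an ESP, here is a minimal Python sketch. The function name `split_ab` and the example addresses are hypothetical, and it assumes a simple random split is acceptable; your ESP may do this differently.

```python
import random


def split_ab(recipients, seed=None):
    """Randomly split a mailing list into two equal-sized groups."""
    shuffled = list(recipients)            # copy so the original list is untouched
    random.Random(seed).shuffle(shuffled)  # random order removes signup-order bias
    half = len(shuffled) // 2
    # With an odd count, one recipient is left out so both groups match in size.
    return shuffled[:half], shuffled[half:2 * half]


# Hypothetical mailing list, for illustration only
mailing_list = [f"user{i}@example.com" for i in range(11)]
group_a, group_b = split_ab(mailing_list, seed=1)
```

Group A would then receive the first variation and group B the second.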

Thankfully, conducting an A/B test for your email campaign doesn’t involve sending two separate emails by hand. Most modern ESPs have the feature built in, but you need to keep a few things in mind while A/B testing your emails.

What to follow when A/B testing?

One of the most common reasons email marketers give for not A/B testing is that they don’t know how to start. The following are some of the things you need to follow during A/B testing.

Have a solid theory to back your A/B needs up

Now that you are interested in improving your email campaign performance through A/B testing, it is essential to have a theory to back your needs. Having no strategy for A/B testing your emails is like riding a sports bike with no knowledge of how to ride one… you’ll crash and abandon it forever. The best place to start is understanding what you want to achieve from your test. A higher open rate? More conversions? Fewer unsubscribes?

Based on the goal you select, follow this general approach:

Observation of the issue → Possible reason for the problem → Suggested fix → Measurement of the result
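The last step, measuring the result, usually means checking whether the difference between two variants is larger than chance alone would explain. One common way to do that (an assumption here; this article does not prescribe a method) is a two-proportion z-test. The sketch below uses only the Python standard library; the function name and the example counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(successes_a, sent_a, successes_b, sent_b):
    """Two-sided p-value for the difference between two rates (z-test)."""
    rate_a = successes_a / sent_a
    rate_b = successes_b / sent_b
    # Pooled rate under the assumption that both variants perform the same
    pooled = (successes_a + successes_b) / (sent_a + sent_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical counts: 200 opens of 1,000 sends vs. 250 opens of 1,000 sends
p_value = two_proportion_p_value(200, 1000, 250, 1000)
```

A p-value below your chosen threshold (0.05 is a common convention) suggests the difference between the variants is unlikely to be noise.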

Assign the testing email element to the related metric

Once you have settled on one goal for your A/B test, identify which email elements affect the metric associated with that goal. Do you want to increase the open rate? Focus on subject lines, preview text, and send times. For click rates, focus on the hero image, call-to-action placement, offer type, and body copy. For conversions, the type of personalization, headline copy, or the call-to-action copy could be at fault.

If your goal is concrete, you should have little trouble identifying the correct email element.

Test periodically to eliminate the novelty factor

A single A/B test is not a silver bullet that increases your conversions. When you introduce something new in your email, your subscribers might find it out of the ordinary, and you may observe a spike in the results. That spike may be due to novelty rather than the innovation itself. Periodically repeat the A/B test for the same element to get a result that is free of any novelty effect.

In the long run, to confirm the effectiveness of the goals you set, it is good practice to conduct the A/B test two or three times to rule out outside factors.

A/B testing can also have a third alternative

A/B testing doesn’t necessarily mean testing only two variations of the same email element. Provided that your mailing list is large enough to be split into three or more chunks, you can test more variations of the same element. In the case of call-to-action button placement, one variation can have it in the first fold, the second can have it at the footer, and the third can have it in the middle of the email, after the offer pitch. While testing the effectiveness of call-to-action colors, you can also send a text link instead of a button to one segment of your audience.

A/B testing doesn’t always need to mean testing two variations of the same element; be innovative about it.

Optimize for the devices your customers use

Your marketing efforts need to go where your customers are. A peek into the metrics of your email campaign can offer a wealth of information about which elements are worth A/B testing. One such metric is the device and email client that the majority of your subscribers use to read your emails. It helps you optimize your emails for the most-used devices. Gmail users, for example, can be sent AMP emails that other email clients may not support.

Test between two similar sections of the audience

A/B test results are only valuable if you are testing between two similar sections. A/B testing between a segment of newly joined leads and customers who have been buying for the past year would not give you any information you can use to optimize your emails. To get an even baseline across both variants, you need to test your emails across two similar sections of the audience.

The most common segments are:

- Returning visitors

- New visitors

- Visitors of the same location

- Visitors of common traffic sources

- Different devices

- Different email clients
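One way to keep the two test groups comparable is to split each segment separately, so both variants receive the same mix of, say, new and returning visitors. The following Python sketch illustrates this stratified split; the function name, the `segment` field, and the sample records are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(subscribers, segment_of, seed=None):
    """Split subscribers into two groups with the same segment mix."""
    rng = random.Random(seed)
    by_segment = defaultdict(list)
    for subscriber in subscribers:
        by_segment[segment_of(subscriber)].append(subscriber)

    group_a, group_b = [], []
    for members in by_segment.values():
        rng.shuffle(members)           # randomize within each segment
        half = len(members) // 2
        group_a.extend(members[:half])
        # An odd member out is dropped so the segment counts stay equal.
        group_b.extend(members[half:2 * half])
    return group_a, group_b


# Hypothetical records, for illustration only
subscribers = (
    [{"email": f"new{i}@example.com", "segment": "new"} for i in range(6)]
    + [{"email": f"ret{i}@example.com", "segment": "returning"} for i in range(4)]
)
group_a, group_b = stratified_split(subscribers, lambda s: s["segment"], seed=7)
```

Each group here ends up with three new and two returning visitors, so a difference in results is less likely to come from the audience mix.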

While A/B testing your email campaign, it is easy to get sidetracked and do more harm than good. Most mistakes stem from incomplete or incorrect knowledge, and life is too short to make every mistake yourself and learn from it. So, we have listed some of the A/B testing mistakes marketers commonly make.

What to avoid when A/B testing?

Comparing apples to oranges

A/B email testing can bring out the traits in your emails that your subscribers prefer over what you currently provide, increasing the chance of conversion. The size of the change in conversion varies from company to company and with the strategies implemented. Marketers in their early years tend to compare their email performance with their peers’ results or with those cited in case studies, and are dejected when they don’t reach the same level.

What they miss is that conversion rates differ because everyone has a different marketing style. The conversation you have with your subscribers and your email design also affect the conversion rate.

Not having a baseline to compare

The purpose of conducting an A/B test is to compare the results of any innovation you include in your email campaigns with the baseline set by past campaigns. If you don’t have a baseline for comparison, your efforts are more likely to go down the drain. Additionally, without a baseline, you are more prone to the mistake mentioned above.

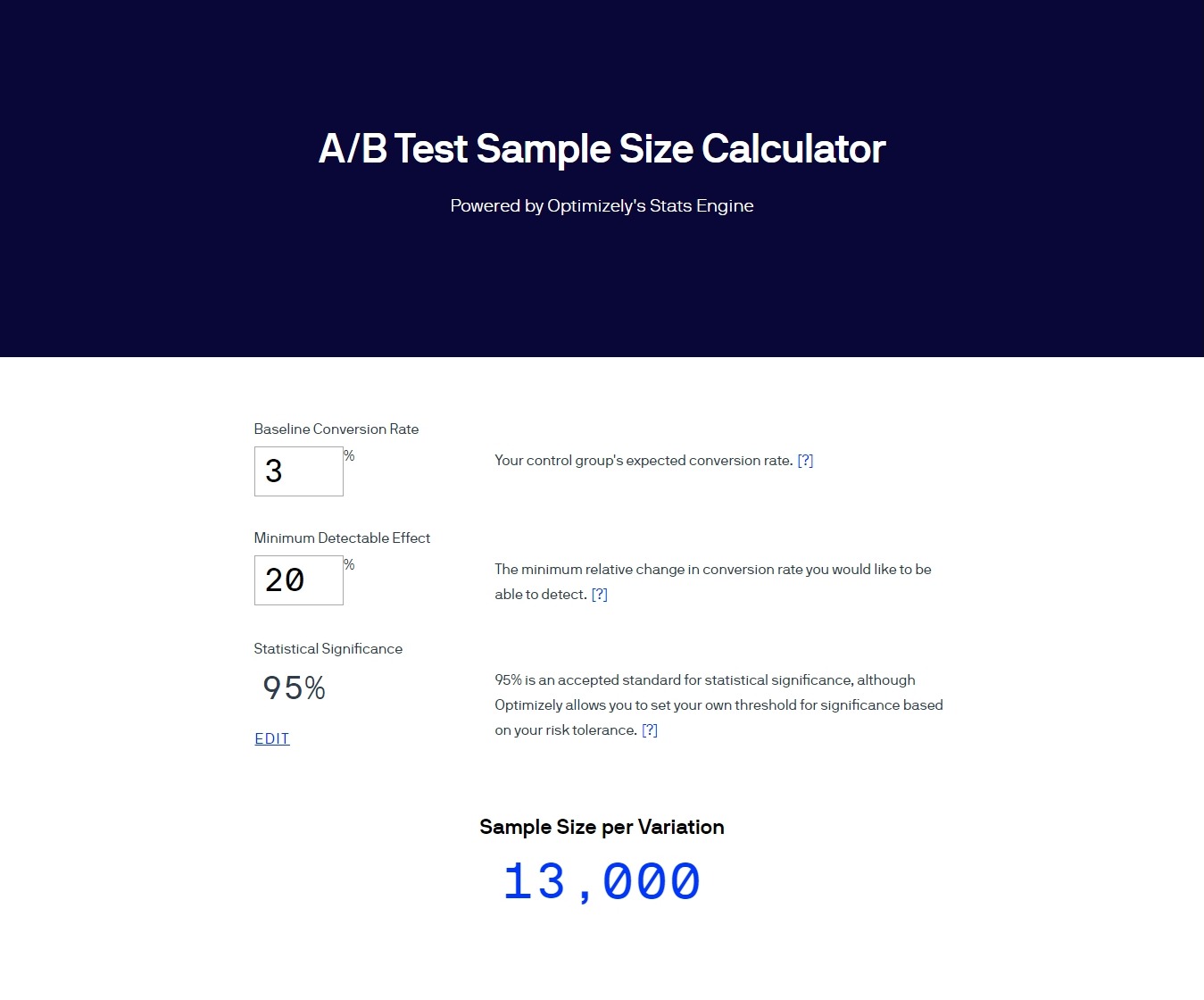

Assuming the sample size can be arbitrary

One of the rookie mistakes marketers tend to make is not having a large enough sample size to fully understand the effects of their A/B testing efforts. The larger the sample size, the more accurately you can detect changes in your subscribers’ behavior. The sample size can be calculated from three factors:

- Expected conversion rate

- Minimum detectable change in conversion rate

- How statistically significant your results should be

Sites like Optimizely and Kissmetrics offer online tools you can use to calculate an approximate sample size for your A/B email testing.
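If you would rather see how such calculators arrive at a number, the sketch below uses the standard normal-approximation formula for comparing two proportions. It is an approximation under stated assumptions: the minimum detectable change is treated as an absolute difference (0.02 means two percentage points), the test is two-sided, and a statistical power of 80% is assumed, which the three factors above do not mention. The function name is hypothetical, and online tools may use slightly different formulas.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, min_detectable_change,
                            alpha=0.05, power=0.8):
    """Approximate recipients needed in EACH variant of a two-variant test."""
    p1 = baseline_rate
    p2 = baseline_rate + min_detectable_change  # absolute change (assumption)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_power = NormalDist().inv_cdf(power)          # assumed power, 80% by default
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical inputs: 20% baseline rate, detect a change of 2 percentage points
needed = sample_size_per_variant(0.20, 0.02)
```

With a 20% baseline rate and a two-point minimum detectable change, this comes out to several thousand recipients per variant, which is why small lists often struggle to detect modest improvements.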

Running too many A/B tests at once

Imagine your email to be a big wall of ever-changing blobs of color. You are tasked with finding the pattern in which the colors change. If you try to watch the wall as a whole, you may be overwhelmed by how much there is to focus on. On the other hand, if you focus on one specific section of the wall, you can track the color change pattern more easily.

Similarly, whenever you A/B test your emails, it is essential to focus on one single element. Otherwise, you may not be able to put a finger on which element change brought about the improvement.

Changing parameters mid-test

While creating a theory to back your A/B testing, it is vital to have a clear goal for what you want to achieve. Some marketers tend to deviate from this goal when they don’t notice any progress mid-test. Never do so. Whenever you conduct an A/B email test, remember that the results take time to stabilize. If you change your goal or the parameters for measuring it mid-test, you need to run the test again from the start to get correct results.

Not testing automated emails

Marketers often assume that they can forget about automated emails once they have set up the automation workflow. As times change, an email that you set up a year ago may need tweaking to stay productive. By not A/B testing your automated emails, you are ignoring a large share of the emails your subscribers interact with.

Denying the result you get, for the result you expect

As we discussed earlier about comparing results with others, email marketers tend to aim high and get disappointed when they miss the target. It is good to have high expectations, but they need to be realistic. For example, if your current open rate is 22%, you cannot expect it to rise to 40% through A/B testing alone. If you have established a baseline from your existing email campaign performance, you can avoid this mistake.

Following the herd

You should A/B test your emails not because everyone does it, but because you believe your emails have scope for improvement. By following the herd, you fail to understand the purpose and end up disappointed.

Making drastic changes to your emails

Unless you are testing the email layout, your subscribers shouldn’t notice any significant difference in your emails, because a sudden change is unexpected for them. It causes confusion and raises suspicion of spam or phishing. The changes you test need to be subtle enough to avoid adverse reactions from your subscribers.

A/B testing ideas to test in your next email campaign

Long vs. Short Subject Lines

The subject line is the first interaction your subscriber has with your email. It needs to be engaging enough to motivate the subscriber to open the email. A longer subject line has its own challenges, as it gets clipped at different lengths in different email clients. The usual guidance is to keep a subject line to about 35 characters, yet your subscribers may be willing to read longer ones. Experiment by A/B testing two different subject line lengths.

High vs. Low Emotional Value Subject Lines

The message in your email subject line is essential, but how you communicate it matters even more. The emotions you convey in your subject line impact the conversion significantly. The emotional value of your subject line can be divided into 3 types:

- Intellectual: Words that trigger a sense of reasoning and evaluation

- Empathetic: Words that trigger a positive emotional reaction to the problem faced

- Spiritual: Words that trigger the deep emotional level of a subscriber

Emoji in your subject line

In a modern world that has an expression for every situation, emojis enhance the conversations we have. Even so, emojis cannot be used at every opportunity, and you can gauge how your subscribers receive them by A/B testing with a small sample. Their reception can vary with email client support, brand personality, level of comfort, type of business model (B2B or B2C), and personal preferences.

Experiment with email copy approach

The tone of your email copy matters a lot in engaging your customers. If you observe decent open rates but comparatively low click rates, you need to rework the approach of your email copy. There are multiple ways to include persuasion and influence that draw your subscribers to read further. Some of the most common approaches in email marketing are:

Before-Bridge-After: This email copy style opens with a problem that your customer relates to. You follow up with the solution that you used and end with the current situation, which is where the subscriber aims to be.

Pain-Agitation-Solution: This email copy style directly addresses your customer’s pain points, agitates the pain by stating your customer’s thoughts in your own words, and then provides the solution that would eliminate the pain point.

AIDA Method: This is a well-known marketing model in which you draw Attention to the issue at hand, build the reader’s Interest, build their Desire not to miss out, and prompt them to take Action once they finish reading your email.

Best Practices

Here are a few best practices to keep in mind when running an email A/B test:

- Keep your sample as large as possible for an accurate result

- Your gut may pull you in a direction that contradicts the collected data. Resist it.

- Test your email campaign frequently for consistent results free from novelty effects

- Always test one email element at a time